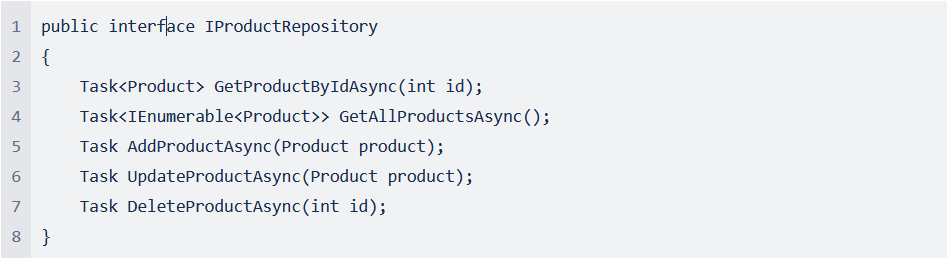

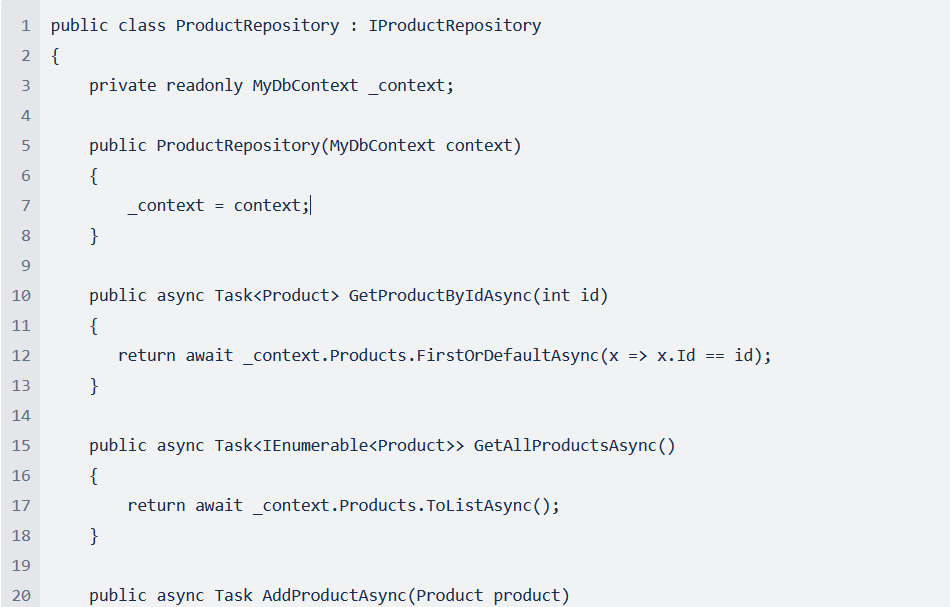

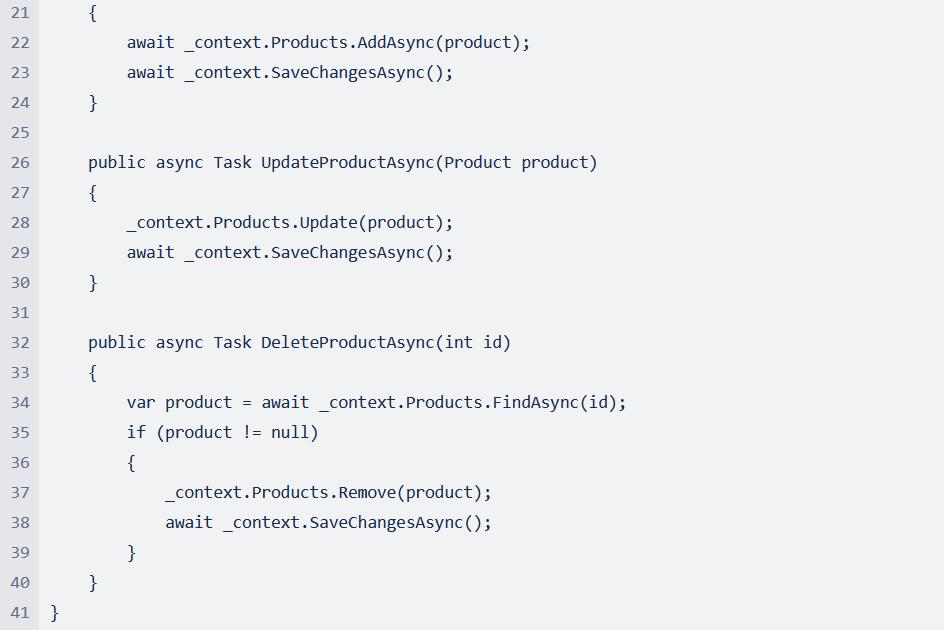

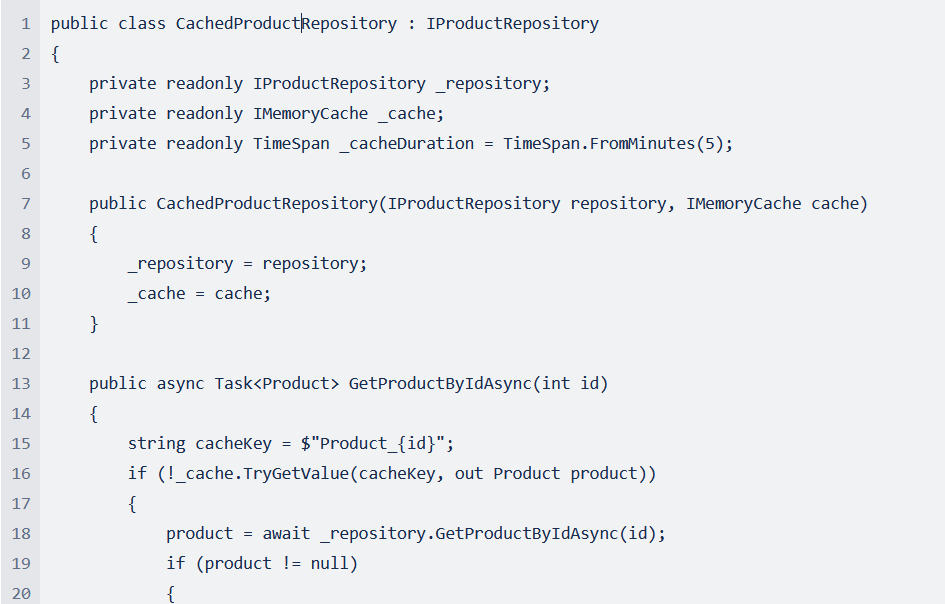

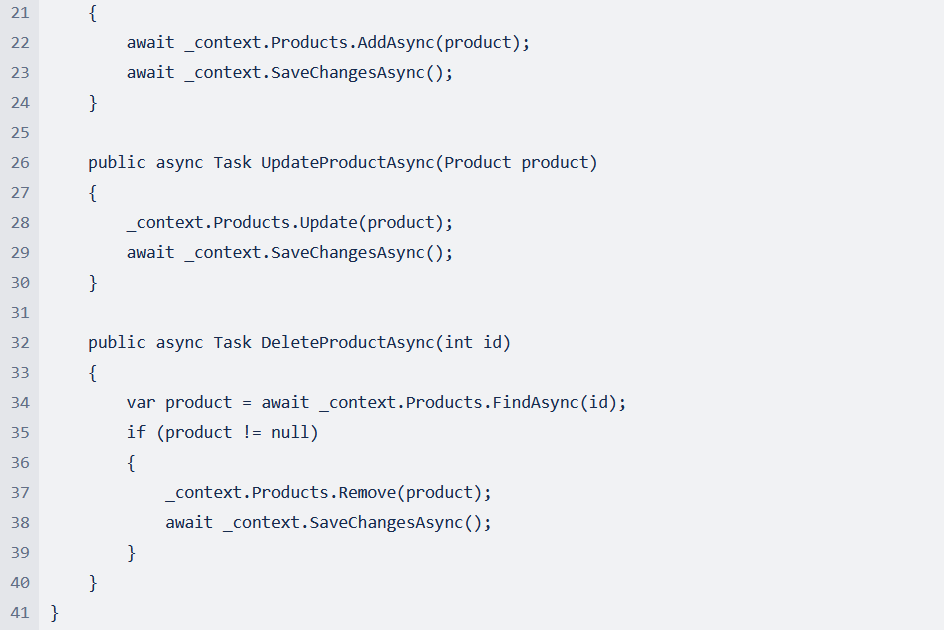

A cached repository is a design pattern that improves application performance by keeping data in a cache, a fast-access memory area. This reduces the number of database accesses, which improves response times and the application’s scalability. A repository abstracts data access and provides a uniform interface for CRUD operations (Create, Read, Update, Delete). Combining the two concepts is an effective way to optimize data access patterns in modern applications.
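To make the idea concrete, here is a minimal sketch of a cached repository written as a decorator around a plain repository. It assumes the Microsoft.Extensions.Caching.Memory NuGet package. `Product`, `IProductRepository`, `InMemoryProductRepository` (a stand-in for a database-backed repository), the `product:{id}` key format, and the five-minute lifetime are hypothetical choices made for illustration only.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory; // NuGet: Microsoft.Extensions.Caching.Memory

// Hypothetical entity and repository contract, used only for illustration.
public record Product(int Id, string Name);

public interface IProductRepository
{
    Task<Product?> GetByIdAsync(int id);
    Task UpdateAsync(Product product);
}

// Stand-in for a database-backed repository (for example one built on EF Core).
public sealed class InMemoryProductRepository : IProductRepository
{
    private readonly Dictionary<int, Product> _rows = new() { [1] = new Product(1, "Keyboard") };

    // Counts simulated database reads so the effect of the cache is visible.
    public int ReadCount { get; private set; }

    public Task<Product?> GetByIdAsync(int id)
    {
        ReadCount++;
        return Task.FromResult<Product?>(_rows.TryGetValue(id, out var row) ? row : null);
    }

    public Task UpdateAsync(Product product)
    {
        _rows[product.Id] = product;
        return Task.CompletedTask;
    }
}

// Decorator: implements the same interface and adds caching in front of the inner repository.
public sealed class CachedProductRepository : IProductRepository
{
    private readonly IProductRepository _inner;
    private readonly IMemoryCache _cache;

    public CachedProductRepository(IProductRepository inner, IMemoryCache cache)
    {
        _inner = inner;
        _cache = cache;
    }

    private static string Key(int id) => $"product:{id}";

    public async Task<Product?> GetByIdAsync(int id)
    {
        // Serve from the cache when possible.
        if (_cache.TryGetValue(Key(id), out Product? cached))
            return cached;

        // Cache miss: ask the inner repository and remember the result (assumed 5-minute lifetime).
        var product = await _inner.GetByIdAsync(id);
        if (product is not null)
            _cache.Set(Key(id), product, TimeSpan.FromMinutes(5));
        return product;
    }

    public async Task UpdateAsync(Product product)
    {
        await _inner.UpdateAsync(product);
        _cache.Remove(Key(product.Id)); // drop the stale entry after a write
    }
}
```

Callers depend only on `IProductRepository`, so wrapping the inner repository in `CachedProductRepository` requires no changes on their side. With this sketch, two consecutive reads of the same ID should reach the inner repository only once.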

For advanced developers, cached repositories offer several advantages:

In addition to the decorator pattern for cached repositories, there are several other caching strategies:

Each strategy has specific use cases and challenges that must be carefully evaluated.

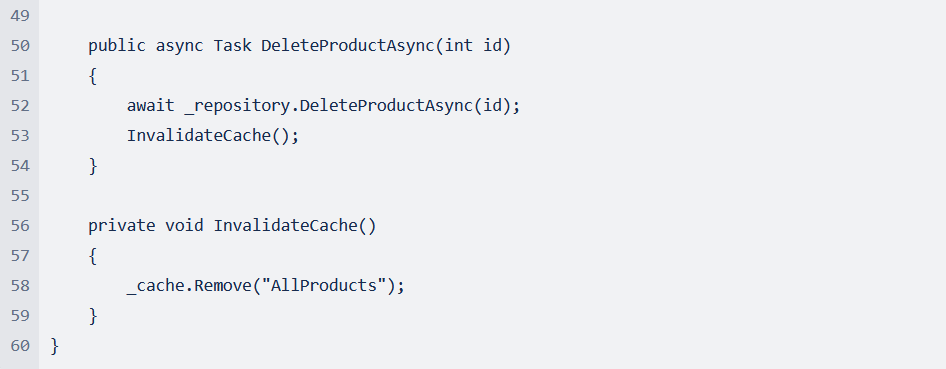

Cache invalidation and synchronization are complex topics that require special attention:

Cache Invalidation:

Time-to-Live (TTL): Set a TTL for cache entries to ensure automatic invalidation.

Event-Based Invalidation: Use events or message queues to synchronize cache invalidations in distributed systems.
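Both invalidation approaches can be sketched with IMemoryCache as follows. This is an illustrative example, not a definitive implementation: `ProductChanged`, `ProductCacheInvalidator`, the key format, and the chosen durations are assumptions. In a distributed system, `Handle` would typically be called by a message-queue subscriber, which is not shown here.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory; // NuGet: Microsoft.Extensions.Caching.Memory

// Hypothetical event, e.g. received from a message queue when a product changes.
public sealed record ProductChanged(int ProductId);

public sealed class ProductCacheInvalidator
{
    private readonly IMemoryCache _cache;

    public ProductCacheInvalidator(IMemoryCache cache) => _cache = cache;

    // Assumed key format; it must match the one used when reading from the cache.
    public static string Key(int id) => $"product:{id}";

    // TTL: the entry is evicted at most 5 minutes after it was written,
    // or earlier if it has not been accessed for 1 minute.
    public void Store(int id, object value)
    {
        var options = new MemoryCacheEntryOptions
        {
            AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(5),
            SlidingExpiration = TimeSpan.FromMinutes(1)
        };
        _cache.Set(Key(id), value, options);
    }

    // Event-based invalidation: remove the entry as soon as a change is announced.
    public void Handle(ProductChanged evt) => _cache.Remove(Key(evt.ProductId));
}
```

The TTL acts as a safety net that bounds how long stale data can survive, while the event handler removes entries promptly when a change is known.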

Synchronization Between Cache and Database:

A thorough understanding and correct implementation of these techniques are crucial for successfully leveraging cached repositories in demanding applications.

In summary, combined with the decorator pattern and Entity Framework Core, cached repositories offer an effective method for optimizing data access patterns. They provide significant performance benefits but require careful implementation and management to avoid pitfalls.

An interesting question was asked about the MemoryCache, which I would like to include here:

Q: Is it necessary to secure the cache separately with a lock to make the repository thread-safe?

A: Normally it is not necessary to explicitly secure access to ‘_cache’ with a SemaphoreSlim or other locking mechanism, as IMemoryCache is already thread-safe.

The internal implementation of IMemoryCache ensures that read and write operations are correctly synchronized so that parallel accesses are safe.

Although IMemoryCache itself is thread-safe, additional protection, e.g. through a SemaphoreSlim, can be useful in certain scenarios: to prevent a cache stampede, to avoid duplicate database queries, or to keep cache invalidation consistent.

If such circumstances occur in your application, you should secure access to the _cache.
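A minimal sketch of such protection, assuming the Microsoft.Extensions.Caching.Memory package: a SemaphoreSlim combined with a second cache check ensures that only one caller loads a missing value while the others wait and then read it from the cache. `StampedeSafeCache` and `GetOrLoadAsync` are hypothetical names, and the single shared semaphore is a simplification.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory; // NuGet: Microsoft.Extensions.Caching.Memory

// Hypothetical helper that guards cache misses with a SemaphoreSlim.
public sealed class StampedeSafeCache
{
    private readonly IMemoryCache _cache;
    private readonly SemaphoreSlim _gate = new(1, 1); // one loader at a time

    public StampedeSafeCache(IMemoryCache cache) => _cache = cache;

    public async Task<T> GetOrLoadAsync<T>(string key, Func<Task<T>> load, TimeSpan ttl)
        where T : class
    {
        // Fast path: no locking needed for a cache hit.
        if (_cache.TryGetValue(key, out T? hit) && hit is not null)
            return hit;

        await _gate.WaitAsync();
        try
        {
            // Second check: another caller may have filled the cache while we waited.
            if (_cache.TryGetValue(key, out hit) && hit is not null)
                return hit;

            var value = await load(); // e.g. the actual database query
            _cache.Set(key, value, ttl);
            return value;
        }
        finally
        {
            _gate.Release();
        }
    }
}
```

Because one semaphore guards all keys, loads for unrelated keys also wait for each other in this sketch. A per-key lock would reduce that contention at the cost of more bookkeeping.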

For a “normal” application, such as those we often work with at Macrix, this is not strictly necessary.

Benjamin Witt

Macrix Lead Developer

P.S. For more of Ben’s publications, along with exercise files and demos, go here.